Combining Different Models for Ensemble Learning

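Building on the concepts and techniques from the previous chapters, this chapter combines multiple classifiers into a single ensemble classifier that can perform better than any of its individual members. The main topics are:

- Making predictions based on majority voting

- Reducing overfitting by drawing random combinations of the training set with repetition

- Building powerful models from weak learners that learn from their mistakes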

Learning with ensembles

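The goal of ensemble methods is to combine multiple different classifiers into a meta-classifier that generalizes better than any individual classifier alone. There are several techniques for building ensembles. In this section we introduce the most basic approach and look at why ensembles tend to generalize better.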
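A classic back-of-the-envelope argument for why ensembles can generalize better goes like this. Suppose we have n base classifiers for a binary task, each with the same error rate ε, and assume (unrealistically) that their errors are independent. The majority vote is then wrong only if ⌈n/2⌉ or more members are wrong. For even n this pessimistically counts ties as errors. The ensemble error is therefore the binomial tail probability, the sum over k from ⌈n/2⌉ to n of C(n, k) ε^k (1 - ε)^(n - k). Below is a minimal Python sketch of that formula. `ensemble_error` is an illustrative helper, not a library function:

```python
from math import ceil, comb


def ensemble_error(n_classifier: int, error: float) -> float:
    """Probability that a majority vote of n_classifier base classifiers,
    each with error rate `error`, predicts the wrong label.

    Assumes a binary task and fully independent errors (idealized).
    """
    # smallest number of wrong votes that makes the majority vote wrong
    k_start = ceil(n_classifier / 2)
    return sum(
        comb(n_classifier, k) * error**k * (1 - error) ** (n_classifier - k)
        for k in range(k_start, n_classifier + 1)
    )


# 11 base classifiers, each wrong 25% of the time
print(round(ensemble_error(11, 0.25), 3))  # 0.034
```

Under these idealized assumptions, 11 members that each err 25% of the time give an ensemble error of only about 3.4%. Once the individual error rate exceeds 0.5, though, voting makes things worse: `ensemble_error(11, 0.6)` is about 0.75. Real classifiers trained on the same data make correlated errors, so actual gains are smaller.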

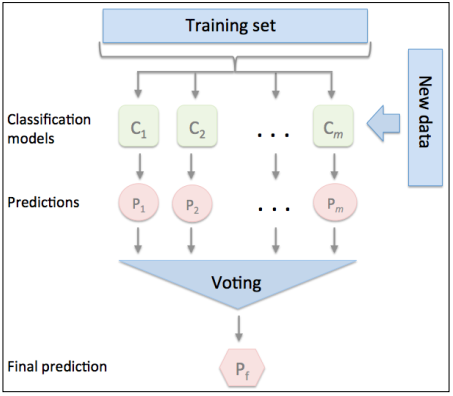

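The most popular ensemble method is majority voting. Each sample receives the class label predicted by more than 50% of the classifiers. Strictly speaking, the term majority vote refers to binary classification only. The principle generalizes easily to multiclass problems, however, where it is known as plurality voting: we select the class label that received the most votes.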

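Starting from the training set, we train m different classifiers (C1, …, Cm), for example decision trees, support vector machines, or logistic regression classifiers. Alternatively, we can fit the same classification algorithm to different subsets of the training set. The accompanying figure shows a schematic of an ensemble that combines its members by majority vote.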
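Below is a minimal numpy sketch of plurality voting. It assumes that each of the m classifiers has already produced integer class labels. The `votes_per_sample` matrix is made-up illustration data, and `plurality_vote` is a hypothetical helper name. With only two classes, the same code performs a majority vote.

```python
import numpy as np

# Made-up predictions: rows = samples, columns = the m = 3 classifiers C1..C3.
# np.bincount requires non-negative integer class labels.
votes_per_sample = np.array([
    [0, 0, 1],  # two votes for class 0
    [2, 1, 2],  # two votes for class 2
    [0, 1, 2],  # three-way tie
])


def plurality_vote(row):
    """Return the label with the most votes; ties go to the smallest label
    because np.argmax returns the index of the first maximum."""
    return np.argmax(np.bincount(row))


ensemble_labels = np.apply_along_axis(plurality_vote, axis=1, arr=votes_per_sample)
print(ensemble_labels)  # [0 2 0]
```

Note the tie-breaking rule: because np.argmax returns the first maximum, the three-way tie resolves to the smallest class label.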

Implementing a simple majority vote classifier

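Let's warm up by implementing a simple ensemble classifier that combines different classification algorithms, each associated with an individual confidence weight: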

```python
from sklearn.base import BaseEstimator
from sklearn.base import ClassifierMixin
from sklearn.preprocessing import LabelEncoder
from sklearn.base import clone
from sklearn.pipeline import _name_estimators  # private helper that auto-names estimators
import numpy as np


# scikit-learn expects mixins to come before BaseEstimator in the base-class list
class MajorityVoteClassifier(ClassifierMixin, BaseEstimator):
    """ A majority vote ensemble classifier

    Parameters
    ----------
    classifiers : array-like, shape = [n_classifiers]
        Different classifiers for the ensemble

    vote : str, {'classlabel', 'probability'}
        Default: 'classlabel'
        If 'classlabel' the prediction is based on the argmax of class labels.
        Else if 'probability', the argmax of the sum of probabilities is used
        to predict the class label (recommended for calibrated classifiers).

    weights : array-like, shape = [n_classifiers]
        Optional, default: None
        If a list of `int` or `float` values are provided, the classifiers
        are weighted by importance; uses uniform weights if `weights=None`.

    """
    def __init__(self, classifiers, vote='classlabel', weights=None):
        self.classifiers = classifiers
        # e.g. {'pipeline-1': pipe1, 'decisiontreeclassifier': clf2, ...}
        self.named_classifiers = {key: value for key, value
                                  in _name_estimators(classifiers)}
        self.vote = vote
        self.weights = weights

    def fit(self, X, y):
        """ Fit classifiers.

        Parameters
        ----------
        X : {array-like, sparse matrix}, shape = [n_samples, n_features]
            Matrix of training samples.

        y : array-like, shape = [n_samples]
            Vector of target class labels.

        Returns
        -------
        self : object

        """
        # Use LabelEncoder to ensure class labels start
        # with 0, which is important for np.argmax
        # call in self.predict
        self.lablenc_ = LabelEncoder()
        self.lablenc_.fit(y)
        self.classes_ = self.lablenc_.classes_
        self.classifiers_ = []
        for clf in self.classifiers:
            # clone so that the user-supplied estimators stay unfitted
            fitted_clf = clone(clf).fit(X, self.lablenc_.transform(y))
            self.classifiers_.append(fitted_clf)
        return self

    def predict(self, X):
        """ Predict class labels for X.

        Parameters
        ----------
        X : {array-like, sparse matrix}, shape = [n_samples, n_features]
            Matrix of samples.

        Returns
        -------
        maj_vote : array-like, shape = [n_samples]
            Predicted class labels.

        """
        if self.vote == 'probability':
            maj_vote = np.argmax(self.predict_proba(X), axis=1)
        else:  # 'classlabel' vote
            # Collect results from clf.predict calls,
            # shape = [n_samples, n_classifiers]
            predictions = np.asarray([clf.predict(X)
                                      for clf in self.classifiers_]).T
            # (weighted) vote count per class for each sample, then argmax
            maj_vote = np.apply_along_axis(
                lambda x: np.argmax(np.bincount(x, weights=self.weights)),
                axis=1,
                arr=predictions)
        maj_vote = self.lablenc_.inverse_transform(maj_vote)
        return maj_vote

    def predict_proba(self, X):
        """ Predict class probabilities for X.

        Parameters
        ----------
        X : {array-like, sparse matrix}, shape = [n_samples, n_features]
            Training vectors, where n_samples is the number of samples and
            n_features is the number of features.

        Returns
        -------
        avg_proba : array-like, shape = [n_samples, n_classes]
            Weighted average probability for each class per sample.

        """
        probas = np.asarray([clf.predict_proba(X) for clf in self.classifiers_])
        avg_proba = np.average(probas, axis=0, weights=self.weights)
        return avg_proba

    def get_params(self, deep=True):
        """ Get classifier parameter names for GridSearch """
        if not deep:
            return super().get_params(deep=False)
        else:
            out = self.named_classifiers.copy()
            # sklearn.externals.six was removed; dict.items() does the same job
            for name, step in self.named_classifiers.items():
                for key, value in step.get_params(deep=True).items():
                    out['%s__%s' % (name, key)] = value
            return out
```
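The two vote modes of MajorityVoteClassifier can disagree. The small numpy sketch below uses made-up predictions for a single sample and the same np.bincount and np.average calls as `predict` and `predict_proba` above. The weights 0.2, 0.2 and 0.6 are chosen purely for illustration:

```python
import numpy as np

# Made-up example: three classifiers with weights 0.2, 0.2, 0.6
weights = [0.2, 0.2, 0.6]

# 'classlabel' vote: C1 and C2 predict class 0, C3 predicts class 1.
# bincount sums the weights per class -> [0.4, 0.6], so class 1 wins.
labels = np.array([0, 0, 1])
hard = np.argmax(np.bincount(labels, weights=weights))

# 'probability' vote: each row is one classifier's [P(class 0), P(class 1)]
probas = np.array([[0.9, 0.1],
                   [0.8, 0.2],
                   [0.4, 0.6]])
avg = np.average(probas, axis=0, weights=weights)  # -> [0.58, 0.42]
soft = np.argmax(avg)

print(hard, soft)  # 1 0
```

In the label vote, C3 alone outweighs C1 and C2. In the probability vote, C1 and C2 are much more confident in class 0 than C3 is in class 1, so the decision flips.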

Combining different algorithms for classification with majority vote

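To make the classification task more challenging, we select only two features from the Iris dataset, sepal width and petal length, and only the two classes Iris-versicolor and Iris-virginica. We evaluate the models by ROC AUC: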

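```python
from sklearn import datasets
# sklearn.cross_validation was removed in scikit-learn 0.20; use model_selection
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler, LabelEncoder

iris = datasets.load_iris()
# Samples 50-149 are Iris-versicolor and Iris-virginica;
# columns 1 and 2 are sepal width and petal length
X, y = iris.data[50:, [1, 2]], iris.target[50:]
le = LabelEncoder()
y = le.fit_transform(y)  # map labels {1, 2} to {0, 1}

X_train, X_test, y_train, y_test = train_test_split(X, y,
                                                    test_size=0.5,
                                                    random_state=1)
```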
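The models in this section are compared by ROC AUC (`scoring='roc_auc'`). The minimal numpy sketch below shows what that number means: it computes AUC as the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one, with ties counting as half. `roc_auc_pairwise` is a hypothetical helper, not a scikit-learn function. For real use, `sklearn.metrics.roc_auc_score` is the tool.

```python
import numpy as np


def roc_auc_pairwise(y_true, y_score):
    """ROC AUC as the fraction of (positive, negative) pairs in which the
    positive sample gets the higher score; ties count as half.
    Builds an n_pos x n_neg matrix, so only meant for small inputs."""
    y_true = np.asarray(y_true)
    y_score = np.asarray(y_score, dtype=float)
    pos = y_score[y_true == 1]
    neg = y_score[y_true == 0]
    diff = pos[:, None] - neg[None, :]  # all pairwise score differences
    return (np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / diff.size


y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]
print(roc_auc_pairwise(y_true, y_score))  # 0.75
```

Three of the four positive/negative pairs are ranked correctly (only 0.35 vs. 0.4 is not), hence 0.75.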

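Now let's train three different classifiers: logistic regression, a decision tree, and k-nearest neighbors (KNN). We look at their individual performance via 10-fold cross-validation on the training set: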

```python
from sklearn.model_selection import cross_val_score
from sklearn.linear_model import LogisticRegression
from sklearn.tree import DecisionTreeClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
import numpy as np

clf1 = LogisticRegression(penalty='l2', C=0.001, random_state=0)
clf2 = DecisionTreeClassifier(max_depth=1, criterion='entropy', random_state=0)
clf3 = KNeighborsClassifier(n_neighbors=1, p=2, metric='minkowski')

# Logistic regression and KNN are sensitive to feature scales, so they get a
# standardization step; the decision tree does not need one
pipe1 = Pipeline([['sc', StandardScaler()], ['clf', clf1]])
pipe3 = Pipeline([['sc', StandardScaler()], ['clf', clf3]])

clf_labels = ['Logistic Regression', 'Decision Tree', 'KNN']

print('10-fold cross validation:\n')
for clf, label in zip([pipe1, clf2, pipe3], clf_labels):
    scores = cross_val_score(estimator=clf,
                             X=X_train,
                             y=y_train,
                             cv=10,
                             scoring='roc_auc')
    print("ROC AUC: %0.2f (+/- %0.2f) [%s]" % (scores.mean(), scores.std(), label))
```

Output:

```
10-fold cross validation:

ROC AUC: 0.92 (+/- 0.20) [Logistic Regression]
ROC AUC: 0.92 (+/- 0.15) [Decision Tree]
ROC AUC: 0.93 (+/- 0.10) [KNN]
```

Next, let's combine the individual classifiers by majority voting with our MajorityVoteClassifier:

```python
mv_clf = MajorityVoteClassifier(classifiers=[pipe1, clf2, pipe3])

clf_labels += ['Majority Voting']
all_clf = [pipe1, clf2, pipe3, mv_clf]

for clf, label in zip(all_clf, clf_labels):
    scores = cross_val_score(estimator=clf,
                             X=X_train,
                             y=y_train,
                             cv=10,
                             scoring='roc_auc')
    # scoring='roc_auc', so these are ROC AUC values, not accuracies
    print("ROC AUC: %0.2f (+/- %0.2f) [%s]" % (scores.mean(), scores.std(), label))
```

Output:

```
ROC AUC: 0.92 (+/- 0.20) [Logistic Regression]
ROC AUC: 0.92 (+/- 0.15) [Decision Tree]
ROC AUC: 0.93 (+/- 0.10) [KNN]
ROC AUC: 0.97 (+/- 0.10) [Majority Voting]
```

Evaluating and tuning the ensemble classifier

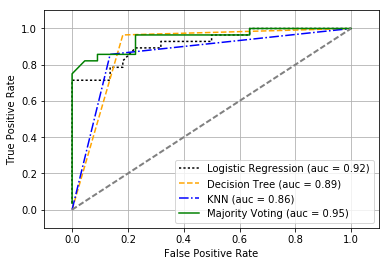

Let's now compute ROC curves on the test set to see how well the MajorityVoteClassifier generalizes to unseen data:

```python
import matplotlib.pyplot as plt
from sklearn.metrics import roc_curve, auc

colors = ['black', 'orange', 'blue', 'green']
linestyles = [':', '--', '-.', '-']

for clf, label, clr, ls in zip(all_clf, clf_labels, colors, linestyles):
    # assuming the label of the positive class is 1
    y_pred = clf.fit(X_train, y_train).predict_proba(X_test)[:, 1]
    fpr, tpr, thresholds = roc_curve(y_true=y_test, y_score=y_pred)
    roc_auc = auc(x=fpr, y=tpr)
    plt.plot(fpr, tpr, color=clr, linestyle=ls,
             label='%s (auc = %0.2f)' % (label, roc_auc))

plt.legend(loc='lower right')
plt.plot([0, 1], [0, 1],
         linestyle='--',
         color='gray',
         linewidth=2)
plt.xlim([-0.1, 1.1])
plt.ylim([-0.1, 1.1])
plt.grid()
plt.xlabel('False Positive Rate')
plt.ylabel('True Positive Rate')
plt.show()
```

The ensemble classifier also performs well on the test set (ROC AUC = 0.95).

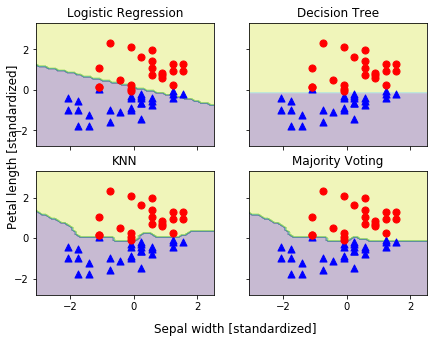

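Because we selected only two features, we can also visualize the decision regions of the ensemble classifier. Standardizing the training features before fitting is not strictly necessary, since the logistic regression and KNN pipelines already contain a standardization step. We standardize here only so that the decision regions of the decision tree are on the same scale for plotting: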

```python
from itertools import product

import numpy as np
import matplotlib.pyplot as plt
from sklearn.preprocessing import StandardScaler

sc = StandardScaler()
X_train_std = sc.fit_transform(X_train)

# Grid that covers the standardized feature space plus a margin of 1
x_min = X_train_std[:, 0].min() - 1
x_max = X_train_std[:, 0].max() + 1
y_min = X_train_std[:, 1].min() - 1
y_max = X_train_std[:, 1].max() + 1
xx, yy = np.meshgrid(np.arange(x_min, x_max, 0.1),
                     np.arange(y_min, y_max, 0.1))

f, axarr = plt.subplots(nrows=2, ncols=2,
                        sharex='col', sharey='row',
                        figsize=(7, 5))

# product([0, 1], [0, 1]) yields the subplot positions (0, 0), (0, 1), (1, 0), (1, 1)
for idx, clf, tt in zip(product([0, 1], [0, 1]), all_clf, clf_labels):
    clf.fit(X_train_std, y_train)
    # Predict every grid point and reshape back to the grid for contourf
    Z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)
    axarr[idx[0], idx[1]].contourf(xx, yy, Z, alpha=0.3)
    axarr[idx[0], idx[1]].scatter(X_train_std[y_train == 0, 0],
                                  X_train_std[y_train == 0, 1],
                                  c='blue', marker='^', s=50)
    axarr[idx[0], idx[1]].scatter(X_train_std[y_train == 1, 0],
                                  X_train_std[y_train == 1, 1],
                                  c='red', marker='o', s=50)
    axarr[idx[0], idx[1]].set_title(tt)

# Shared axis labels, positioned in the data coordinates of the last subplot
plt.text(-3.5, -4.5, s='Sepal width [standardized]',
         ha='center', va='center', fontsize=12)
plt.text(-10.5, 4.5, s='Petal length [standardized]',
         ha='center', va='center', fontsize=12, rotation=90)
plt.show()
```

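Before we tune the parameters of the individual classifiers in the ensemble, let's call the get_params method. It shows how the individual parameters are named, and therefore how we can access them inside a GridSearchCV object: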

```python
mv_clf.get_params()
```

Output:

```
{'decisiontreeclassifier': DecisionTreeClassifier(class_weight=None, criterion='entropy', max_depth=1,
            max_features=None, max_leaf_nodes=None,
            min_impurity_split=1e-07, min_samples_leaf=1,
            min_samples_split=2, min_weight_fraction_leaf=0.0,
            presort=False, random_state=0, splitter='best'),
 'decisiontreeclassifier__class_weight': None,
 'decisiontreeclassifier__criterion': 'entropy',
 'decisiontreeclassifier__max_depth': 1,
 'decisiontreeclassifier__max_features': None,
 'decisiontreeclassifier__max_leaf_nodes': None,
 'decisiontreeclassifier__min_impurity_split': 1e-07,
 'decisiontreeclassifier__min_samples_leaf': 1,
 'decisiontreeclassifier__min_samples_split': 2,
 'decisiontreeclassifier__min_weight_fraction_leaf': 0.0,
 'decisiontreeclassifier__presort': False,
 'decisiontreeclassifier__random_state': 0,
 'decisiontreeclassifier__splitter': 'best',
 'pipeline-1': Pipeline(steps=[['sc', StandardScaler(copy=True, with_mean=True, with_std=True)], ['clf', LogisticRegression(C=0.001, class_weight=None, dual=False, fit_intercept=True,
          intercept_scaling=1, max_iter=100, multi_class='ovr', n_jobs=1,
          penalty='l2', random_state=0, solver='liblinear', tol=0.0001,
          verbose=0, warm_start=False)]]),
 'pipeline-1__clf': LogisticRegression(C=0.001, class_weight=None, dual=False, fit_intercept=True,
          intercept_scaling=1, max_iter=100, multi_class='ovr', n_jobs=1,
          penalty='l2', random_state=0, solver='liblinear', tol=0.0001,
          verbose=0, warm_start=False),
 'pipeline-1__clf__C': 0.001,
 'pipeline-1__clf__class_weight': None,
 'pipeline-1__clf__dual': False,
 'pipeline-1__clf__fit_intercept': True,
 'pipeline-1__clf__intercept_scaling': 1,
 'pipeline-1__clf__max_iter': 100,
 'pipeline-1__clf__multi_class': 'ovr',
 'pipeline-1__clf__n_jobs': 1,
 'pipeline-1__clf__penalty': 'l2',
 'pipeline-1__clf__random_state': 0,
 'pipeline-1__clf__solver': 'liblinear',
 'pipeline-1__clf__tol': 0.0001,
 'pipeline-1__clf__verbose': 0,
 'pipeline-1__clf__warm_start': False,
 'pipeline-1__sc': StandardScaler(copy=True, with_mean=True, with_std=True),
 'pipeline-1__sc__copy': True,
 'pipeline-1__sc__with_mean': True,
 'pipeline-1__sc__with_std': True,
 'pipeline-1__steps': [['sc',
                        StandardScaler(copy=True, with_mean=True, with_std=True)],
                       ['clf',
                        LogisticRegression(C=0.001, class_weight=None, dual=False, fit_intercept=True,
          intercept_scaling=1, max_iter=100, multi_class='ovr', n_jobs=1,
          penalty='l2', random_state=0, solver='liblinear', tol=0.0001,
          verbose=0, warm_start=False)]],
 'pipeline-2': Pipeline(steps=[['sc', StandardScaler(copy=True, with_mean=True, with_std=True)], ['clf', KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',
           metric_params=None, n_jobs=1, n_neighbors=1, p=2,
           weights='uniform')]]),
 'pipeline-2__clf': KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',
           metric_params=None, n_jobs=1, n_neighbors=1, p=2,
           weights='uniform'),
 'pipeline-2__clf__algorithm': 'auto',
 'pipeline-2__clf__leaf_size': 30,
 'pipeline-2__clf__metric': 'minkowski',
 'pipeline-2__clf__metric_params': None,
 'pipeline-2__clf__n_jobs': 1,
 'pipeline-2__clf__n_neighbors': 1,
 'pipeline-2__clf__p': 2,
 'pipeline-2__clf__weights': 'uniform',
 'pipeline-2__sc': StandardScaler(copy=True, with_mean=True, with_std=True),
 'pipeline-2__sc__copy': True,
 'pipeline-2__sc__with_mean': True,
 'pipeline-2__sc__with_std': True,
 'pipeline-2__steps': [['sc',
                        StandardScaler(copy=True, with_mean=True, with_std=True)],
                       ['clf',
                        KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',
           metric_params=None, n_jobs=1, n_neighbors=1, p=2,
           weights='uniform')]]}
```
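The keys above follow scikit-learn's `<component>__<parameter>` naming convention, which is what lets GridSearchCV reach into nested estimators. Below is a simplified pure-Python sketch of how such a key can be split level by level. `route_param` and `resolve_path` are hypothetical helpers. scikit-learn's own `set_params` performs a similar split on the first double underscore, with additional validation.

```python
def route_param(key):
    """Split a nested parameter key into (outer component, remaining key).

    Only the first '__' is consumed, so deeper levels can be resolved
    by applying the function again to the remaining key.
    """
    outer, sep, rest = key.partition("__")
    if not sep:
        return outer, None  # a parameter of the object itself, e.g. 'vote'
    return outer, rest


def resolve_path(key):
    """Expand a key into the list of names visited on the way down."""
    path = []
    while key is not None:
        outer, key = route_param(key)
        path.append(outer)
    return path


print(route_param("pipeline-1__clf__C"))   # ('pipeline-1', 'clf__C')
print(resolve_path("pipeline-1__clf__C"))  # ['pipeline-1', 'clf', 'C']
print(route_param("vote"))                 # ('vote', None)
```

This is also why component names such as `pipeline-1` must not contain a double underscore themselves.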

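Now let's use the grid search introduced in an earlier chapter to tune the inverse regularization parameter C of the logistic regression classifier and the depth of the decision tree: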

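```python
# sklearn.grid_search was removed; GridSearchCV now lives in model_selection
from sklearn.model_selection import GridSearchCV

params = {'decisiontreeclassifier__max_depth': [1, 2],
          'pipeline-1__clf__C': [0.001, 0.1, 100.0]}

grid = GridSearchCV(estimator=mv_clf,
                    param_grid=params,
                    cv=10,
                    scoring='roc_auc')
grid.fit(X_train, y_train)

# The old grid_scores_ attribute was replaced by the cv_results_ dict
results = grid.cv_results_
for mean_score, std_score, p in zip(results['mean_test_score'],
                                    results['std_test_score'],
                                    results['params']):
    print("%0.3f +/- %0.2f %r" % (mean_score, std_score / 2, p))
```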

Output:

```
0.967 +/- 0.05 {'decisiontreeclassifier__max_depth': 1, 'pipeline-1__clf__C': 0.001}
0.967 +/- 0.05 {'decisiontreeclassifier__max_depth': 1, 'pipeline-1__clf__C': 0.1}
1.000 +/- 0.00 {'decisiontreeclassifier__max_depth': 1, 'pipeline-1__clf__C': 100.0}
0.967 +/- 0.05 {'decisiontreeclassifier__max_depth': 2, 'pipeline-1__clf__C': 0.001}
0.967 +/- 0.05 {'decisiontreeclassifier__max_depth': 2, 'pipeline-1__clf__C': 0.1}
1.000 +/- 0.00 {'decisiontreeclassifier__max_depth': 2, 'pipeline-1__clf__C': 100.0}
```
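The six rows above are simply the Cartesian product of the two lists in `params`: 2 depths × 3 values of C. The pure-Python sketch below performs that expansion. It also shows why a 10-fold grid search over this grid means 60 fits of the ensemble, plus one final refit on the whole training set with GridSearchCV's default `refit=True`. `sklearn.model_selection.ParameterGrid` does this expansion for real; this is only a simplified illustration.

```python
from itertools import product

# Same grid as passed to GridSearchCV above
param_grid = {'decisiontreeclassifier__max_depth': [1, 2],
              'pipeline-1__clf__C': [0.001, 0.1, 100.0]}

# Expand the dict of lists into one dict per candidate (Cartesian product);
# keys are sorted so the order matches the listing above
keys = sorted(param_grid)
candidates = [dict(zip(keys, values))
              for values in product(*(param_grid[k] for k in keys))]

for candidate in candidates:
    print(candidate)

n_folds = 10
print(len(candidates) * n_folds)  # 60
```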

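The best cross-validation score is obtained with weaker regularization of the logistic regression classifier (C = 100.0), while the depth of the decision tree does not affect performance in this grid. Using the test set more than once for model evaluation is bad practice, so we won't estimate the generalization performance of the tuned hyperparameters here. Instead, we move on to another ensemble learning approach: bagging.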

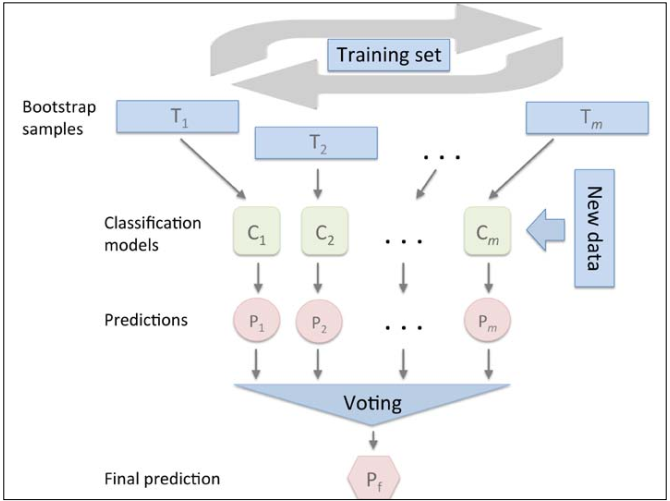

Bagging - building an ensemble of classifiers from bootstrap samples

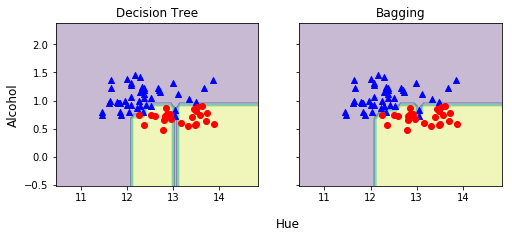

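Bagging is an ensemble learning technique closely related to majority voting; its concept is summarized in the accompanying schematic.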

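Bagging does not fit every classifier in the ensemble on the same training set. Instead, each classifier is trained on a bootstrap sample, that is, a random sample drawn from the training set with replacement. This is why bagging is also known as bootstrap aggregating. To set up a more challenging classification problem, we use the Wine dataset and consider only classes 2 and 3 and only the two features Alcohol and Hue: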

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import train_test_split

df_wine = pd.read_csv('https://archive.ics.uci.edu/ml/machine-learning-databases/wine/wine.data',
                      header=None)
df_wine.columns = ['Class label', 'Alcohol',
                   'Malic acid', 'Ash',
                   'Alcalinity of ash',
                   'Magnesium', 'Total phenols',
                   'Flavanoids', 'Nonflavanoid phenols',
                   'Proanthocyanins',
                   'Color intensity', 'Hue',
                   'OD280/OD315 of diluted wines',
                   'Proline']

# Drop class 1, keeping only classes 2 and 3
df_wine = df_wine[df_wine['Class label'] != 1]
y = df_wine['Class label'].values
X = df_wine[['Alcohol', 'Hue']].values

le = LabelEncoder()
y = le.fit_transform(y)  # map labels {2, 3} to {0, 1}

# 60:40 split into training and test sets
X_train, X_test, y_train, y_test = \
    train_test_split(X, y, test_size=0.40, random_state=1)
```
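Bagging's key ingredient is the bootstrap sample. For each base classifier, as many indices as there are training samples are drawn with replacement, so some samples appear several times and others not at all. The small numpy sketch below illustrates this; the sample size and seed are arbitrary choices. It shows that a bootstrap sample contains only about 63.2% of the distinct training samples on average.

```python
import numpy as np

rng = np.random.default_rng(seed=1)  # fixed seed only to make the run reproducible
n_samples = 10_000

# One bootstrap sample: n_samples indices drawn *with* replacement
# (the setting max_samples=1.0, bootstrap=True used with BaggingClassifier)
boot_idx = rng.integers(0, n_samples, size=n_samples)

# Fraction of distinct training samples that made it into the bootstrap sample;
# the remaining ones are "out-of-bag" for this base classifier
unique_frac = np.unique(boot_idx).size / n_samples
oob_frac = 1.0 - unique_frac

print(f"in bootstrap: {unique_frac:.3f}, out-of-bag: {oob_frac:.3f}")
# both should be close to 1 - 1/e (about 0.632) and 1/e (about 0.368)
```

Because every base classifier sees a different subset, their individual errors are less correlated, and averaging their votes reduces variance.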

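With the data split 60:40 into training and test sets, we use scikit-learn's BaggingClassifier to build an ensemble of 500 unpruned decision trees. First, let's look at the accuracy of a single unpruned decision tree on the training and test sets: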

```python
from sklearn.ensemble import BaggingClassifier
from sklearn.tree import DecisionTreeClassifier
from sklearn.metrics import accuracy_score

# max_depth=None: the tree is grown fully (unpruned)
tree = DecisionTreeClassifier(criterion='entropy', max_depth=None)

# `estimator` was called `base_estimator` before scikit-learn 1.2
bag = BaggingClassifier(estimator=tree,
                        n_estimators=500,
                        max_samples=1.0,   # bootstrap samples as large as the training set
                        max_features=1.0,
                        bootstrap=True,    # draw samples with replacement
                        bootstrap_features=False,
                        n_jobs=1,
                        random_state=1)

tree = tree.fit(X_train, y_train)
y_train_pred = tree.predict(X_train)
y_test_pred = tree.predict(X_test)
tree_train = accuracy_score(y_train, y_train_pred)
tree_test = accuracy_score(y_test, y_test_pred)
print('Decision tree train/test accuracies %.3f/%.3f' %
      (tree_train, tree_test))
```

Output:

```
Decision tree train/test accuracies 1.000/0.854
```

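The unpruned decision tree classifies all training samples correctly but reaches a noticeably lower test accuracy, which indicates overfitting (high variance):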

```python
bag = bag.fit(X_train, y_train)
y_train_pred = bag.predict(X_train)
y_test_pred = bag.predict(X_test)
bag_train = accuracy_score(y_train, y_train_pred)
bag_test = accuracy_score(y_test, y_test_pred)
print('Bagging train/test accuracies %.3f/%.3f' %
      (bag_train, bag_test))
```

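The bagging classifier performs better on the test set. Next, let's compare the decision regions of the single decision tree and the bagging classifier: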

```python
import numpy as np
import matplotlib.pyplot as plt

x_min = X_train[:, 0].min() - 1
x_max = X_train[:, 0].max() + 1
y_min = X_train[:, 1].min() - 1
y_max = X_train[:, 1].max() + 1
xx, yy = np.meshgrid(np.arange(x_min, x_max, 0.1),
                     np.arange(y_min, y_max, 0.1))

f, axarr = plt.subplots(nrows=1, ncols=2,
                        sharex='col',
                        sharey='row',
                        figsize=(8, 3))

for idx, clf, tt in zip([0, 1], [tree, bag], ['Decision Tree', 'Bagging']):
    clf.fit(X_train, y_train)
    Z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)
    axarr[idx].contourf(xx, yy, Z, alpha=0.3)
    axarr[idx].scatter(X_train[y_train == 0, 0],
                       X_train[y_train == 0, 1],
                       c='blue', marker='^')
    axarr[idx].scatter(X_train[y_train == 1, 0],
                       X_train[y_train == 1, 1],
                       c='red', marker='o')
    axarr[idx].set_title(tt)

# Column 0 of X is Alcohol (x-axis), column 1 is Hue (y-axis)
axarr[0].set_ylabel('Hue', fontsize=12)
plt.text(10.2, -1.2, s='Alcohol', ha='center', va='center', fontsize=12)
plt.show()
```

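Bagging is effective at reducing a model's variance, but it does not help reduce the model's bias. This is why it is typically applied to low-bias models such as unpruned decision trees.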
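Stripped of scikit-learn's bookkeeping, bagging amounts to "fit on bootstrap samples, then vote". The minimal numpy sketch below follows that reading, using a hypothetical 1-nearest-neighbor base learner. 1-NN is a low-bias, high-variance model, the kind of learner bagging helps most. The toy data are made up. The sketch uses hard voting. scikit-learn's BaggingClassifier instead averages predicted probabilities when the base estimator provides `predict_proba`.

```python
import numpy as np


def fit_predict_1nn(X_fit, y_fit, X_query):
    """Hypothetical base learner: 1-nearest neighbor (low bias, high variance)."""
    # squared Euclidean distance between every query point and every fit point
    d = ((X_query[:, None, :] - X_fit[None, :, :]) ** 2).sum(axis=2)
    return y_fit[np.argmin(d, axis=1)]


def bagging_predict(X_fit, y_fit, X_query, n_estimators=25, seed=0):
    """Bagging sketch: train the base learner on bootstrap samples and combine
    the predictions by plurality vote (labels: non-negative integers)."""
    rng = np.random.default_rng(seed)
    n = len(y_fit)
    votes = np.empty((n_estimators, len(X_query)), dtype=int)
    for b in range(n_estimators):
        idx = rng.integers(0, n, size=n)  # bootstrap sample, drawn with replacement
        votes[b] = fit_predict_1nn(X_fit[idx], y_fit[idx], X_query)
    # one column per query point -> most frequent label in each column
    return np.array([np.bincount(col).argmax() for col in votes.T])


X_toy = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
                  [5.0, 5.0], [5.0, 6.0], [6.0, 5.0]])
y_toy = np.array([0, 0, 0, 1, 1, 1])
X_new = np.array([[0.5, 0.5], [5.5, 5.5]])
print(bagging_predict(X_toy, y_toy, X_new))  # [0 1]
```

An individual bootstrap sample can occasionally miss a whole class, which makes that single 1-NN model wrong. The vote over 25 models smooths out such unlucky draws.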

Leveraging weak learners via adaptive boosting

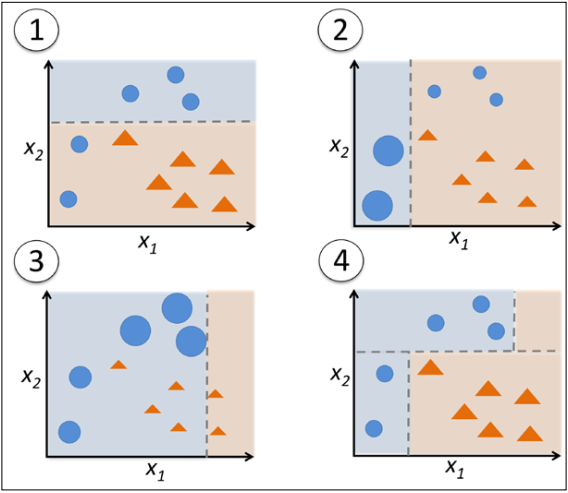

In this section we discuss the most common boosting algorithm, adaptive boosting (AdaBoost). The basic procedure of the original boosting algorithm is as follows:

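- Draw a random subset d1 of training samples without replacement from the training set D, and train a weak learner C1 on it

- Draw a second random training subset without replacement from D, add 50% of the samples that C1 misclassified to form d2, and train a weak learner C2 on it

- Find the training samples d3 in D on which C1 and C2 disagree, and train a third weak learner C3 on them

- Combine the three weak learners C1, C2, and C3 via majority voting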
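The four steps above can be sketched in numpy as follows. This is only an illustration under stated assumptions. `fit_stump` is a hypothetical weak learner (the best single-feature threshold rule), `boost_three` is a hypothetical name, and the fallback used when C1 and C2 never disagree is an assumption the procedure itself does not specify.

```python
import numpy as np


def fit_stump(X, y):
    """Hypothetical weak learner: the one-feature threshold rule with the lowest
    training error on (X, y). Labels are 0/1; returns a predict function."""
    best_err, best_rule = np.inf, None
    for j in range(X.shape[1]):
        for t in np.unique(X[:, j]):
            for sign in (1, -1):
                pred = (sign * (X[:, j] - t) >= 0).astype(int)
                err = np.mean(pred != y)
                if err < best_err:
                    best_err, best_rule = err, (j, t, sign)
    j, t, sign = best_rule
    return lambda Z: (sign * (Z[:, j] - t) >= 0).astype(int)


def boost_three(X, y, subset_size, seed=0):
    """Sketch of the original three-learner boosting scheme."""
    rng = np.random.default_rng(seed)
    n = len(y)
    # Step 1: random subset d1 drawn without replacement -> weak learner C1
    d1 = rng.choice(n, size=subset_size, replace=False)
    c1 = fit_stump(X[d1], y[d1])
    # Step 2: new random subset plus 50% of the samples C1 misclassified -> C2
    wrong = np.flatnonzero(c1(X) != y)
    half_wrong = rng.permutation(wrong)[: len(wrong) // 2]
    d2 = np.union1d(rng.choice(n, size=subset_size, replace=False), half_wrong)
    c2 = fit_stump(X[d2], y[d2])
    # Step 3: samples on which C1 and C2 disagree -> C3
    d3 = np.flatnonzero(c1(X) != c2(X))
    # Assumption: if C1 and C2 never disagree, reuse C1 as the third vote
    c3 = fit_stump(X[d3], y[d3]) if d3.size else c1
    # Step 4: majority vote of the three weak learners
    return lambda Z: ((c1(Z) + c2(Z) + c3(Z)) >= 2).astype(int)


X_toy = np.arange(20, dtype=float).reshape(-1, 1)
y_toy = (X_toy[:, 0] >= 10).astype(int)
boosted_predict = boost_three(X_toy, y_toy, subset_size=8)
print(np.mean(boosted_predict(X_toy) == y_toy))  # training accuracy of the vote
```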

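Compared with bagging, boosting can reduce bias as well as variance. In contrast to the original boosting procedure, AdaBoost uses the complete training set to train the weak learners and reweights the training samples in each iteration. Misclassified samples receive larger weights, so each new weak learner focuses on the mistakes of the previous ones, and together they form a strong classifier. The accompanying figure illustrates the training process of an AdaBoost classifier.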
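The reweighting step can be made concrete with one round of the textbook (discrete) AdaBoost update for class labels in {-1, 1}. The labels, predictions, and uniform starting weights are made-up illustration data, and the second learner in the final vote is hypothetical. scikit-learn's AdaBoostClassifier implements the SAMME family of algorithms, whose formulas are scaled differently and additionally shrunk by `learning_rate`, so its internal numbers will not match this sketch exactly.

```python
import numpy as np

# Made-up round on 10 samples with class labels in {-1, 1}
y_toy = np.array([1, 1, 1, -1, -1, -1, 1, 1, 1, -1])
y_hat = np.array([1, 1, 1, -1, -1, -1, -1, -1, -1, -1])  # weak learner's predictions
w = np.full(y_toy.size, 0.1)                               # uniform starting weights

# 1) weighted error rate of the weak learner (3 mistakes x 0.1)
eps = np.sum(w[y_hat != y_toy])
# 2) vote weight of this weak learner: larger when eps is small
alpha = 0.5 * np.log((1.0 - eps) / eps)
# 3) y * y_hat is +1 for correct and -1 for wrong predictions, so this
#    shrinks the weights of correct samples and grows those of mistakes
w = w * np.exp(-alpha * y_toy * y_hat)
# 4) renormalize so the weights sum to 1
w = w / np.sum(w)
print(np.round(w, 3))

# Final prediction after all rounds: sign of the alpha-weighted sum of the
# weak learners' outputs. The second learner and its alpha are hypothetical.
alphas = np.array([alpha, 0.2])
preds = np.vstack([y_hat, -y_hat])
y_final = np.sign(alphas @ preds)
```

After this single round, each misclassified sample carries weight 1/6 and each correctly classified one 1/14. The three mistakes therefore hold half of the total weight, which forces the next weak learner to pay attention to them.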

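We again use the Wine data, this time to train an AdaBoost ensemble classifier. Via the estimator parameter (called base_estimator in scikit-learn versions before 1.2), we build it from 500 decision tree stumps: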

```python
from sklearn.ensemble import AdaBoostClassifier
from sklearn.tree import DecisionTreeClassifier
from sklearn.metrics import accuracy_score

# max_depth=1: a decision stump, a typical weak learner
tree = DecisionTreeClassifier(criterion='entropy', max_depth=1)

# `estimator` was called `base_estimator` before scikit-learn 1.2
ada = AdaBoostClassifier(estimator=tree,
                         n_estimators=500,
                         learning_rate=0.1,
                         random_state=0)

tree = tree.fit(X_train, y_train)
y_train_pred = tree.predict(X_train)
y_test_pred = tree.predict(X_test)
tree_train = accuracy_score(y_train, y_train_pred)
tree_test = accuracy_score(y_test, y_test_pred)
print('Decision tree stump train/test accuracies %.3f/%.3f' %
      (tree_train, tree_test))
```

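As a weak learner, the decision stump tends to underfit the training data rather than overfit it, in contrast to the unpruned decision tree from the previous section. Now let's fit the AdaBoost ensemble: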

```python
ada = ada.fit(X_train, y_train)
y_train_pred = ada.predict(X_train)
y_test_pred = ada.predict(X_test)
ada_train = accuracy_score(y_train, y_train_pred)
ada_test = accuracy_score(y_test, y_test_pred)
print('AdaBoost train/test accuracies %.3f/%.3f' %
      (ada_train, ada_test))
```

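The AdaBoost model predicts all class labels of the training set correctly. However, in reducing the model's bias we have also introduced additional variance, visible as a larger gap between training and test performance.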